We need an impact source code for AI. How we structure the business of AI, along with the algorithms, could be the most consequential business decisions of our, or all, time.

It may surprise you who is championing the cause of social responsibility in the AI business.

Last week, Elon Musk sued OpenAI, which he helped launch in 2015 before leaving in 2018. The Tesla founder and owner of X, formerly Twitter, accused the company of failing to operate in the public interest. OpenAI, in theory, is controlled by a mission-driven nonprofit.

Musk’s suit contends that OpenAI’s structure was meant to ensure total transparency of its source code for the benefit of humanity, and that its for-profit subsidiary violated this commitment by granting exclusive rights to Microsoft, OpenAI’s major investor.

Backing Musk up is venture capitalist Marc Andreessen, who suggested that OpenAI should be nationalized, given the dangers of AI, including security risks akin to those of nuclear weapons. He later walked back those comments. Andreessen also voiced support for Musk’s call for AI software to be released under open-source licenses.

That’s right: two of the great libertarian tech whales of our time – sworn enemies of ESG, woke capitalism and impact investing – say they want AI to be controlled for the benefit of humanity, not necessarily for free market capitalist profits.

Stakeholder accountability

Musk and Andreessen are positioning themselves as saviors, protecting us from their rapacious peers (although we should, of course, question whether they also have their own competing commercial interests wrapped in their defense of humanity).

In Musk’s case, he has even incorporated his own AI company, xAI, as a Delaware public benefit corporation (as have the founders of at least two other major AI start-ups, Anthropic and Inflection). That makes the companies legally accountable for balancing the interests of their stakeholders with the financial interests of their shareholders.

Other titans of tech have lined up on either side of this battle about the future of AI and, according to some, of humanity. This week, Ron Conway, an influential venture capitalist, organized tech and AI leaders, including OpenAI, Google, Salesforce, and Meta, to rebut Musk’s central claims. They signed a short letter attesting to the benefits of AI and “committing” to building AI that will contribute to a better future for humanity:

“We all have something to contribute to shaping AI’s future, from those using it to create and learn, to those developing new products and services on top of the technology, to those using AI to pursue new solutions to some of humanity’s biggest challenges, to those sharing their hopes and concerns for the impact of AI on their lives,” the letter reads. “AI is for all of us, and all of us have a role to play in building AI to improve people’s lives.”

This letter reminds me of when, in 2019, the Business Roundtable issued a statement pronouncing the end of shareholder primacy and a new era of stakeholder capitalism. Those CEOs said they were now in business to benefit society, not just their shareholders. But when investors and The Wall Street Journal critiqued them for undermining free market capitalism, they were quick to backtrack, saying they wouldn’t actually be doing anything differently. They made especially clear via their corporate attorneys that they had not been suggesting they should actually be governed or measured according to their new ideas.

Similarly, there is not a single commitment in the AI letter to practice business differently, to be transparent, or to be held accountable. They haven’t even pretended to use language like Google’s former motto: “Don’t be evil.”

What has this got to do with stakeholder capitalism or impact investing or responsible business? Everything!

Unknown consequences

Most readers of ImpactAlpha want to do more than make a few investments that have a positive impact. We need systems change toward an inclusive, equitable, regenerative economic system that creates benefit for all and for the long term. That requires business, and the capital markets that support it, to have a purpose beyond profit – to create a positive impact on society, to be legally bound to that purpose, and to be held accountable by investors with the credible, transparent information necessary to do so.

Artificial intelligence is arguably the most consequential technology of our lifetimes, perhaps in history. The businesses that are creating generative AI models and the businesses that are going to use them will change most aspects of our society. Most of us can’t yet understand the actual consequences, good and bad, of artificial general intelligence (most AI scientists acknowledge that they can’t either).

Given these material unknowns, even a high-functioning federal government would be challenged to create and enforce regulations that can keep pace with the humans developing AI, let alone the AI itself.

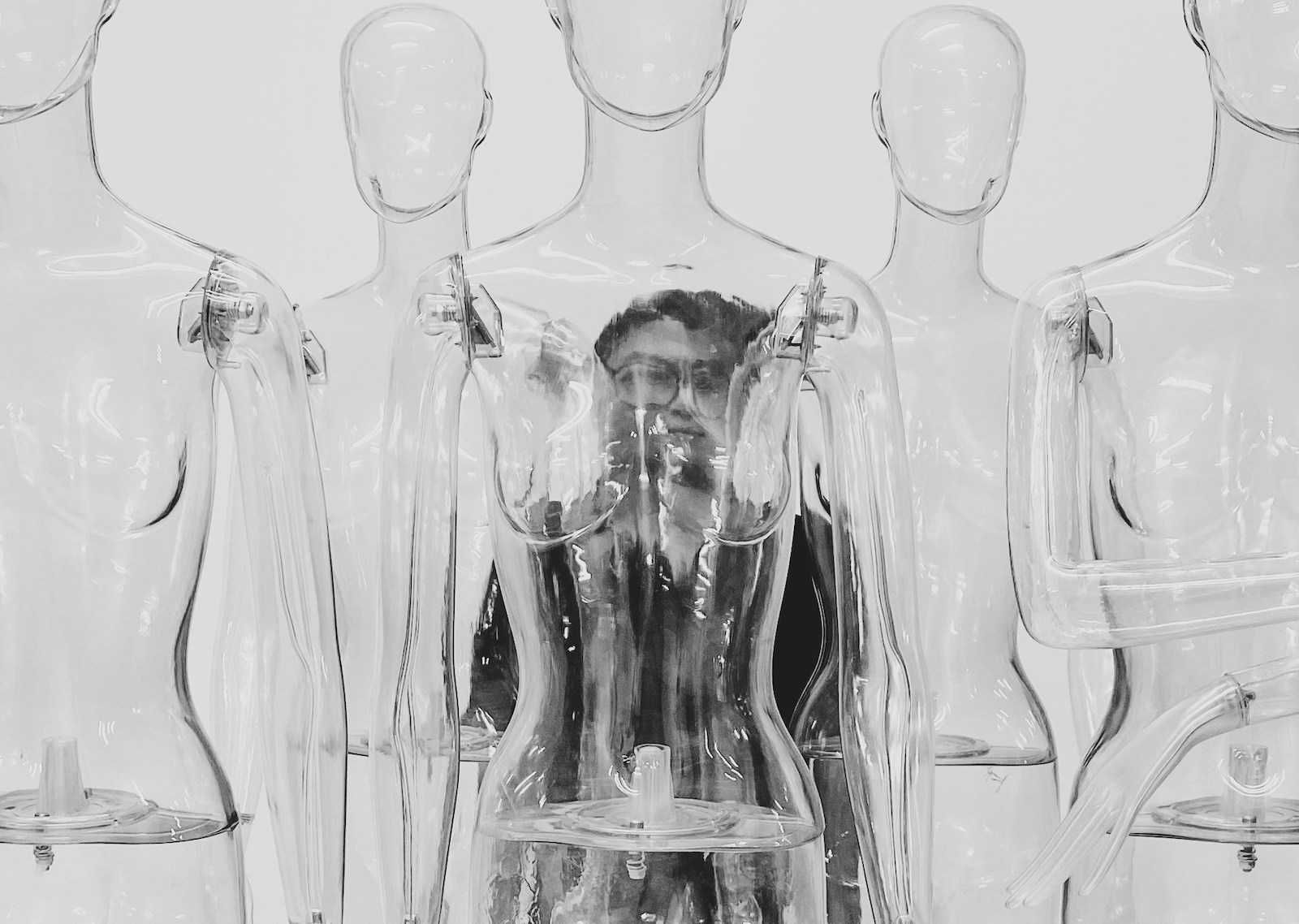

This isn’t a reason not to regulate AI. But it is a reason that we need other solutions, too. Right now, we are like spectators on Mt. Olympus as a group of self-appointed Greek gods with large and fragile egos battle it out for who’s got the better idea for how society will use AI.

These guys (and they are mostly wealthy, white men) feel entitled to decide for us. Most of them have little understanding of how the rest of us live or what we aspire to (humans being a kind of inconvenience for some of them). There is no reason to trust any of them; none have committed themselves to true accountability.

And anyway, we shouldn’t build an economic system that relies on individual heroes.

Duty of care

AI is just technology. Technology is neither good nor bad. It is a tool. Its uses are up to us as a society. If AI is going to be developed by the private sector, then society – that’s us – should think about guardrails that ought to be in place to ensure that this new technology, with humanity-shaping power, seeks to maximize the wellbeing of humanity, not to maximize the profits of shareholders.

When we started the B Corp movement, we had three simple ideas about how business could have a positive impact on society – performance, accountability, and transparency. We put all three of these elements together in a certification because we didn’t believe that any one of them, alone, could ensure that business has a positive impact. Today, over 8,000 companies in 96 countries are certified B Corps and hundreds of thousands of companies are using B Corp social and environmental performance standards and/or benefit corporation governance to operate their businesses for positive impact.

If we apply these principles to AI, with some additional guardrails, there is a better chance that AI can actually meet its promise to benefit humanity. Here’s a short list of what we should demand from the companies developing AI today:

- Accountability. Every AI company should be required to become a benefit corporation, with an upgraded fiduciary duty that makes it accountable to consider its impact on all stakeholders, not just shareholders.

Arguably, given the heightened risk, they should go further with a belt-and-suspenders approach to governance. One idea is to use the benefit corporation structure in conjunction with a purpose trust (similar to what Patagonia recently did). A class of shares or voting control would be held by a trust that has, in its own legal structure, an obligation to vote its shares according to the public interest. Several of the AI companies already are public benefit corporations, but if there aren’t shareholders willing to hold them accountable, then the legal structure alone is insufficient. The purpose trust would help address that problem. Anthropic has gone further than most and added what it calls a “long term benefit trust” alongside the benefit corporation structure to create better mission alignment.

Benefit governance alone is insufficient. Without performance standards and transparency, the rest of us are still relying on the company and its investors. Since that track record is spotty at best, we need more.

- Performance. In many businesses, it’s possible to measure the impact of the business on society. But AI is full of unknowns. In addition to answering questions about the impact of an AI platform on its users, workers, suppliers, communities, and the environment, AI developers should be required to address their business models, how their products can be used and by whom, living by a set of standards that are developed by independent stakeholders, not by themselves.

We cannot rely on standards developed by individual companies or the AI industry because of the inherent conflicts of interest and the ease with which they can be abandoned or altered when things get hard (e.g., Microsoft shut down its Office of Responsible AI “Aether” team in January 2023 as part of a round of layoffs). There are clear best practices for developing and evolving credible, independent, comprehensive, transparent, third-party performance standards – AI ought to be subject to them.

In addition, there should be a requirement that the underlying source code contain directions that the AI cannot do harm to humans, or to the natural and social systems on which we humans depend. How to attain such “alignment” is a hot topic in AI research. Let’s make “Don’t be evil” an actual enforceable standard duty of care built into the source code of AI, not just some words in a corporate code of conduct.

- Transparency. Elon Musk is right about at least one thing. Without transparency of the code, there will simply be no way to know how general AI platforms can be used and for what. Even with a commitment to accountable governance and high standards, the public can never know without transparency. Every developer of an AI platform must be required to provide total transparency of their code.

Systems change begins with individuals — that’s you and me — deciding that we want the system to be different than it is. AI presents the opportunity to apply the principles of stakeholder capitalism to what may be the most consequential business decisions of our, or all, time.

Instead of hoping that Elon Musk turns out to be our savior, not our destroyer, let’s create the performance standards, public transparency, and accountable governance of AI to ensure that it actually benefits us all.

Andrew Kassoy is co-founder and co-chair of B Lab Global, the not-for-profit behind the B Corp movement.